

AI Compliance: Meaning, Regulations, Challenges

Last updated on June 5, 2025 (17 min. read)

AI is quietly running the show behind the scenes. It picks what you see on your feed, answers your support tickets, and even helps decide who gets approved for a loan. But with so much power in its hands, one thing becomes clear. Someone needs to make sure it follows the rules.

That is where AI compliance comes in.

More businesses are turning to generative AI for regulatory compliance, using it to write policies, check controls, and keep up with ever-changing laws. And it is picking up fast. According to McKinsey’s latest State of AI report, 71 percent of companies now use generative AI regularly in at least one part of the business. Among these, risk and compliance are some of the top functions where generative AI is being adopted.

The catch? Many of these companies are still figuring out how to use AI in a way that is fair, explainable, and aligned with emerging regulations, standards, and frameworks like the EU AI Act, ISO 42001, and NIST’s AI RMF.

In this blog, we will break down what AI compliance really means, which rules matter most, and the everyday challenges businesses face while trying to stay compliant.

What is AI compliance?

AI compliance means making sure artificial intelligence systems follow laws, industry rules, and ethical standards. It is not just about whether the AI works — it is about whether it works fairly, safely, and in a way that respects people’s rights.

Imagine an AI tool that scans job applications. AI compliance would ensure that it does not filter out resumes based on someone’s gender or age. Or consider a chatbot that collects personal data. Compliance means that data is protected and only used in ways the user agreed to.

In short, AI compliance puts up guardrails to help companies use AI responsibly. It helps prevent harm, reduce risk, and build trust with users.

This involves checking both what the AI system does and how it does it. For example:

- Making sure the training data is accurate, legal, and as free from bias as possible

- Explaining how the AI made a decision, especially in sensitive areas like lending or hiring

- Keeping the system secure and preventing misuse

- Being open about where and how AI is being used

As AI rules tighten across the world, from the EU to the US and India, companies cannot afford to treat compliance as an afterthought. It is quickly becoming essential for doing business the right way.

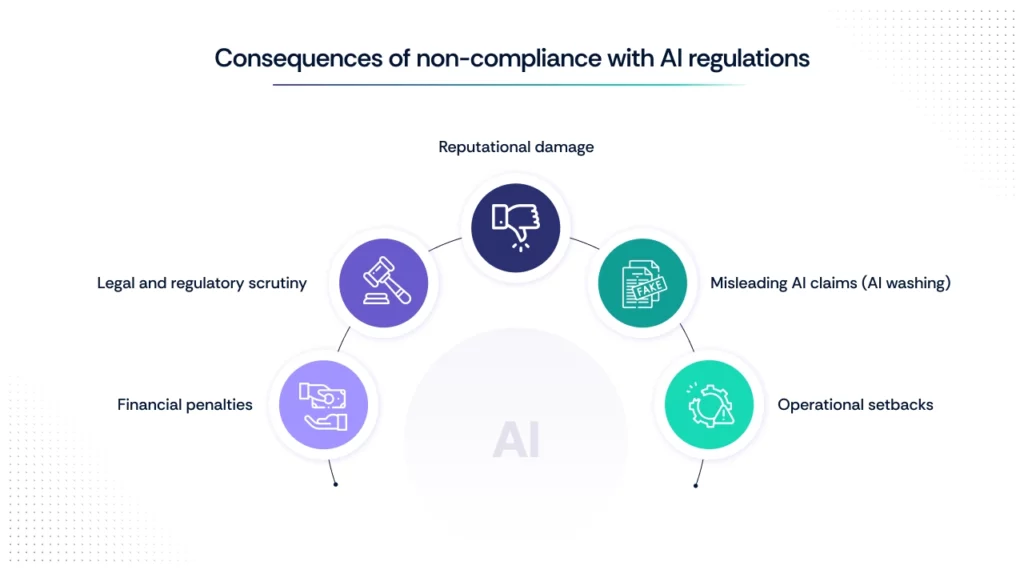

What are the consequences of not complying with AI regulations?

When companies don’t follow the rules around AI, it’s like playing with fire. Sooner or later, it burns — whether it’s through big fines, public backlash, or regulators knocking at the door.

Here’s what non-compliance can cost you, with real-life examples to show it’s not just theory.

1. Financial penalties

Regulators across the globe are starting to enforce AI-related laws with serious fines. These penalties are no longer theoretical.

In December 2024, Italy’s privacy watchdog, Garante, fined OpenAI €15 million. Why? Because ChatGPT was collecting and using people’s data without asking for proper consent. The regulator also flagged that there weren’t enough checks to stop kids from accessing content meant for adults.

Another big one: Clearview AI, a US-based facial recognition company, was fined €30.5 million by the Dutch data protection authority under GDPR. The company had scraped billions of photos from the internet to build its AI without getting permission. That didn’t sit well with Europe’s privacy laws.

2. Legal and regulatory scrutiny

Some companies avoid fines but still face intense pressure from regulators, which can lead to product changes or public commitments.

Take HireVue, a company that used facial analysis in AI-powered job interviews. Critics and regulators raised red flags about whether the tech was fair or transparent. HireVue ended up removing facial analysis from its platform to calm the waters.

3. Reputational damage

When people lose trust, it’s hard to win them back.

In May 2025, scammers used deepfake AI to impersonate well-known financial expert Michael Hewson on social media. The fake videos promoted bogus investment schemes, making it seem like the advice was coming straight from him.

Even though Hewson had nothing to do with it, the damage was done. People were misled, and his credibility was questioned. He is now considering legal action against Meta for not taking the fake content down fast enough.

This is a sharp reminder that even when companies do not build the AI, they can still suffer if it is misused in their name.

4. Misleading AI claims (AI washing)

In March 2024, two investment firms — Delphia and Global Predictions — got into hot water with the U.S. Securities and Exchange Commission.

Together, the two firms agreed to pay $400,000 in civil penalties for making bold claims about their use of AI. Delphia said it used customer data to power AI-driven investment strategies, but in reality, it didn’t. Global Predictions called itself the “first regulated AI financial advisor,” even though it couldn’t back that up.

This was the first time the SEC cracked down on what it called AI washing, where companies hype up their AI use without actually using it. The message was clear: you can’t just throw around the word “AI” and hope no one checks.

5. Operational setbacks

When AI systems don’t meet compliance standards, it’s not just about fines or bad press. Sometimes, the entire operation takes a hit.

For instance, under the EU AI Act, if a high-risk AI system is found to be non-compliant, it can be pulled from the market until it’s fixed. That means halting product launches, pausing services, and scrambling to make things right, all while competitors move ahead.

Even outside the EU, companies are feeling the heat. ZoomInfo, a U.S.-based firm, faced internal pushback when trying to adopt AI tools for compliance tasks. Concerns about data security and regulatory risks led to delays and extra costs. Eventually, they had to implement strict data limitations to move forward.

The takeaway? Skipping over compliance can stall your operations, drain resources, and give your competitors a leg up.

AI is exciting, but it’s not a free-for-all. Failing to play by the rules can cost companies a lot — in money, in time, and in trust. These aren’t one-off stories. They are warning signs for every business using AI without a solid compliance plan in place.

What are some global AI laws, regulations, and standards?

AI is moving fast, and so are the rules trying to keep up with it. Here’s a quick look at how different countries and global bodies are shaping the future of AI through laws, standards, and frameworks.

1. EU AI Act

The world’s first comprehensive AI law, adopted by the EU. It uses a risk-based model to regulate high-risk AI systems in areas like hiring, healthcare, and law enforcement.

2. China’s Generative AI Measures

Targets generative AI tools. It requires providers to conduct security reviews, label AI-generated content, and ensure alignment with state-approved values.

3. ISO/IEC 42001:2023

The first international standard for AI management systems. Helps organizations govern AI responsibly, manage risks, and ensure transparency.

4. NIST AI Risk Management Framework (AI RMF 1.0)

US-developed, but globally respected. NIST AI RMF offers practical guidance to build trustworthy, fair, and explainable AI systems.

5. OECD AI Principles

Endorsed by over 40 countries. High-level guidelines that promote human rights, transparency, accountability, and robustness in AI.

Other countries like Japan, Canada, the UK, India, and Brazil are also drafting their own AI regulations, each with a slightly different take on what “responsible AI” should look like. The global patchwork is still forming, but the direction is clear: AI without oversight is no longer an option.

What are some industries that require AI regulations?

AI regulations are most critical in sectors where automated decisions can directly impact people’s rights, safety, or access to services. Key industries include:

- Financial services (banking, investment, insurance, trading): To prevent bias in lending, fraud detection, and algorithmic trading.

- Legal: To ensure fairness and accuracy in AI-assisted legal research, contract review, and case prediction.

- Software and SaaS: To manage risks in AI-powered features across cloud products and customer-facing apps.

- Enterprise solutions: To ensure responsible AI use in corporate systems like hiring, pricing, or performance evaluation.

- Pharma and healthcare: To safeguard patient safety in diagnostics, treatment, and medical AI tools.

Other sectors like retail, education, and public services are also seeing growing regulatory focus as AI adoption spreads.

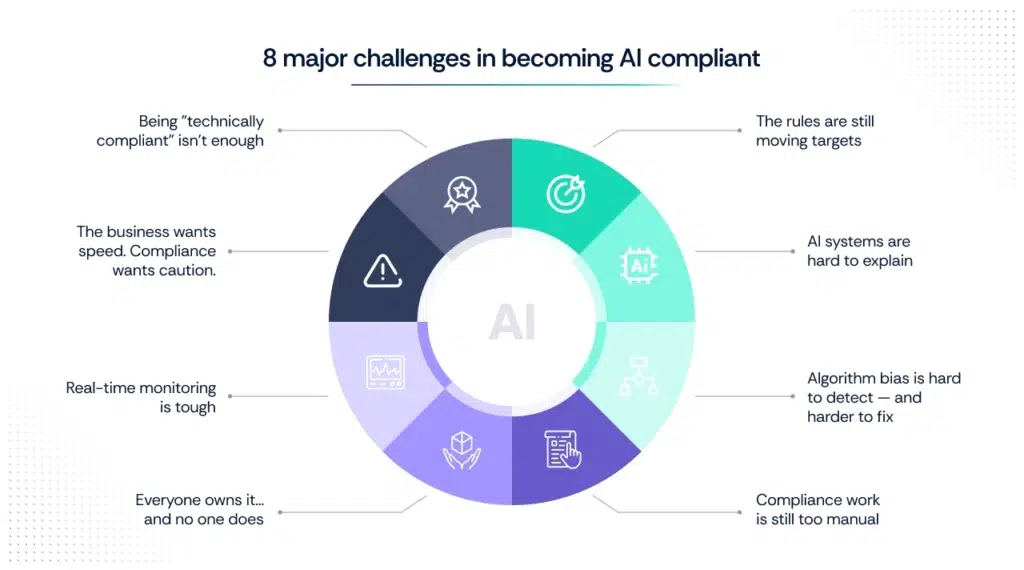

What are the major challenges of becoming AI compliant?

Getting AI compliance right is easier said than done. Most companies are still figuring out how to balance innovation with accountability. And let’s be honest — the rules are changing, the tech is evolving, and the pressure is on.

Here’s where teams often get stuck:

1. The rules are still moving targets

AI regulations are new and not always consistent across regions. What’s acceptable in the US might not fly in the EU or China. Businesses are left guessing what “compliant” really means, and that uncertainty slows down decision-making.

2. AI systems are hard to explain

Many advanced AI models work like black boxes. When regulators ask, “Why did the model make this decision?” teams struggle to provide a clear answer. But explainability isn’t optional anymore, especially in high-stakes areas like healthcare or finance.
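For simpler models, the answer to that question can be computed directly. The sketch below assumes a linear scoring model, where each feature's contribution is just its weight times its value. The function name, weights, and applicant values are hypothetical, and black-box models would need dedicated attribution techniques instead.

```python
def explain_linear_score(weights, bias, applicant):
    """Break a linear score into per-feature contributions.

    The score is bias + sum(weight * value), so each product is exactly
    that feature's share of the final score.
    """
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    # Sort by absolute size so the most influential features come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and applicant values, for illustration only.
weights = {"income_k": 0.5, "debt_ratio": -40.0, "years_employed": 2.0}
applicant = {"income_k": 80, "debt_ratio": 0.5, "years_employed": 3}

score, ranked = explain_linear_score(weights, bias=10.0, applicant=applicant)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

In this made-up case the largest contribution comes from `income_k` and the only negative one from `debt_ratio`, which is the kind of statement a reviewer or regulator can actually check.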

3. Algorithm bias is hard to detect — and harder to fix

Bias doesn’t always show up at first glance. It creeps in through training data, feature selection, or model design. And once it’s there, it can quietly skew decisions in hiring, lending, healthcare, or policing. Proving fairness and maintaining it over time is a major challenge.
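One common first check is to compare outcome rates across groups. The sketch below computes a disparate impact ratio (the lowest group selection rate divided by the highest) on made-up screening data. The 0.8 cutoff follows the widely used four-fifths rule of thumb and is an assumption here, not a legal test under any specific regulation.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group label, passed screening?)
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

ratio = disparate_impact_ratio(sample)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed threshold (four-fifths rule of thumb)
    print("Flag for review")
```

A single ratio like this will not prove fairness, but running it on every model release gives teams a repeatable signal to track over time.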

4. Compliance work is still too manual

Despite all the talk of automation, a lot of AI compliance still lives in spreadsheets and shared drives. That means teams waste time chasing evidence, updating documents, and prepping for audits — often at the last minute.

5. Everyone owns it… and no one does

AI compliance cuts across legal, product, engineering, and risk teams. But without shared responsibility or clear workflows, things fall through the cracks. Who’s in charge of validating training data? Or reviewing third-party AI tools? It gets murky fast.

6. Real-time monitoring is tough

AI systems can change as they learn or adapt to new data. That means a one-time review isn’t enough. Companies need continuous monitoring — not just during audits, but every time a model goes live or updates.
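One way to make continuous monitoring concrete is to compare the data a model sees in production against the data it was validated on. The sketch below uses the Population Stability Index (PSI) on a single numeric feature. The bin count, the sample data, and the 0.25 alert threshold are illustrative assumptions based on a common rule of thumb.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between two non-empty numeric samples.

    Bins are equal-width and derived from the baseline (`expected`) sample.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: validation-time baseline vs. shifted live data.
baseline = list(range(100))
live = [v + 30 for v in baseline]

drift = psi(baseline, live)
print(f"PSI = {drift:.2f}")
if drift > 0.25:  # assumed alert threshold
    print("Significant drift: trigger a model review")
```

Scheduling a check like this after each deployment or data refresh turns the one-time review into an ongoing control.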

7. The business wants speed. Compliance wants caution.

There’s always pressure to move fast, ship features, and stay ahead of the market. But AI compliance often feels like a brake pedal. Finding that balance between building responsibly and staying competitive is one of the hardest parts.

8. Being “technically compliant” isn’t enough

Even if your AI meets the letter of the law, users might still find it creepy, biased, or unsafe. Compliance is also about earning trust — and that takes transparency, accountability, and good design.

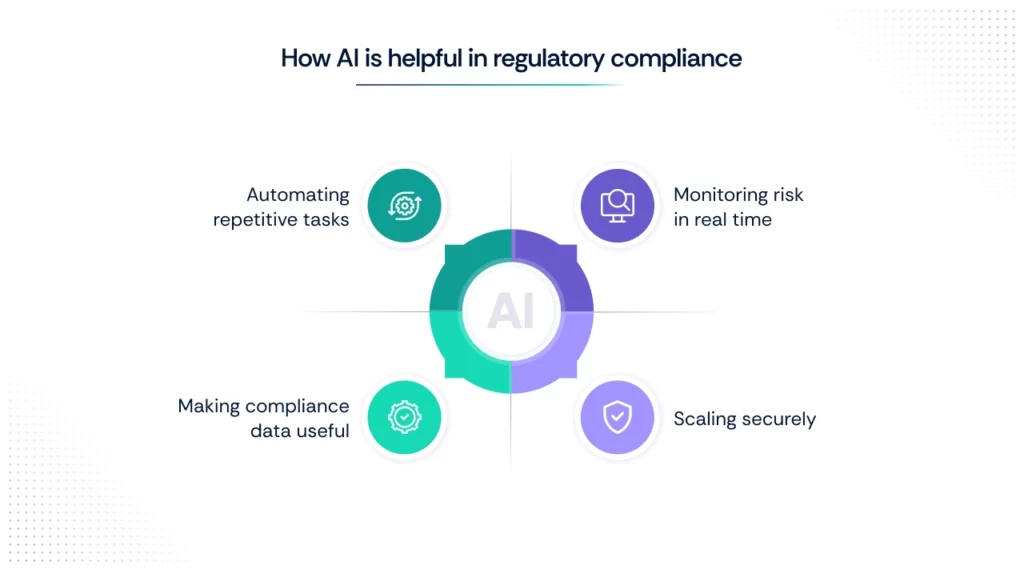

How to use AI in regulatory compliance

Compliance used to mean chasing spreadsheets, digging through evidence folders, and waiting till audit time to discover a control had failed. But with the right use of AI, compliance becomes more proactive, more efficient, more cost-effective, and a lot less painful.

Here’s how leading teams are using AI-driven compliance to get ahead of the curve:

1. Automating repetitive tasks

AI now takes over tedious tasks like collecting evidence, mapping it to the right controls, and flagging missing pieces — all within your automated regulatory compliance software. This saves time, reduces errors, and keeps you ready year-round.
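As a rough illustration of the mapping-and-flagging step, the sketch below matches collected evidence to required controls and reports what is missing or stale. The control IDs, the `Evidence` record, and the 30-day freshness window are hypothetical and not taken from any specific product or framework.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Evidence:
    control_id: str
    collected_on: date


def find_gaps(required_controls, evidence, today, max_age_days=30):
    """Return (missing, stale) control IDs.

    missing: controls with no evidence at all.
    stale:   controls whose newest evidence is older than max_age_days.
    """
    newest = {}
    for item in evidence:
        prev = newest.get(item.control_id)
        if prev is None or item.collected_on > prev:
            newest[item.control_id] = item.collected_on
    cutoff = today - timedelta(days=max_age_days)
    missing = [c for c in required_controls if c not in newest]
    stale = [c for c in required_controls if c in newest and newest[c] < cutoff]
    return missing, stale


# Hypothetical control IDs and evidence records.
controls = ["AC-01", "AC-02", "LOG-01"]
collected = [
    Evidence("AC-01", date(2025, 6, 1)),
    Evidence("LOG-01", date(2025, 3, 1)),
]

missing, stale = find_gaps(controls, collected, today=date(2025, 6, 5))
print("Missing evidence:", missing)
print("Stale evidence:", stale)
```

Running a check like this daily, rather than at audit time, is what keeps a team ready year-round.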

2. Monitoring risk in real time

Instead of waiting for the next audit, teams are using AI to track, monitor, and manage risk exposure every day. Whether it’s a control failure, an untagged asset, or a misconfigured integration — AI spots the issue before it snowballs.

3. Making compliance data useful

Compliance tools now come with built-in analytics and visual dashboards that show exactly where gaps exist, which controls need attention, and how your posture is improving — or slipping.

4. Scaling securely

As companies grow, so do their risks. AI-powered regtech platforms help maintain oversight across teams, systems, and geographies — without needing to scale your compliance team at the same pace.

Best AI compliance software

Finding the right AI compliance software depends on your company’s size, complexity, and goals. Here’s a quick breakdown of top platforms — and how Scrut stands out for startups and growing businesses.

1. Scrut – Best for startups and mid-sized businesses

Scrut brings AI into every layer of your compliance program — not just one feature. It’s built for fast-growing teams that need to stay audit-ready across multiple frameworks, without hiring large compliance teams.

Key features:

- 1400+ pre-mapped controls across ISO 27001, SOC 2, GDPR, PCI DSS, and more

- 100+ integrations that auto-collect and update evidence daily

- Central dashboard to track posture, risks, and audit progress

- Smart tracking and alerts for failed controls, policy gaps, and real-time risks

- Built-in analytics and reports for management and auditors

- Full support for AI-specific regulations, standards, and frameworks like the EU AI Act, ISO 42001, and NIST AI RMF

Scrut is ideal for companies looking to move fast without losing compliance visibility. It’s designed for lean teams who need depth, not complexity.

Start with Scrut and build an AI compliance program that grows with you.

2. IBM OpenPages

Suited for heavily regulated, global organizations. Offers advanced risk analytics, control libraries, and enterprise-grade customization. May require a dedicated admin and higher budgets.

3. ServiceNow GRC

Known for its powerful integration across IT systems. Supports enterprise workflows, but may feel too complex or resource-intensive for smaller teams.

4. OneTrust

Strong in privacy program management, consent tracking, and third-party risk. Often used by privacy teams more than security or compliance leads.

AI regulatory compliance checklist

An AI compliance program isn’t something you want to build on guesswork. Use this checklist of tried-and-tested best practices to evaluate whether your organization is building a responsible, audit-ready AI program that meets global compliance expectations.

What are the current trends in AI regulations and guidelines?

AI regulations are evolving fast as governments and industries catch up with real-world AI use. Here are the top trends shaping the space, based on Scrut’s GRC and AI predictions for 2025:

1. Rise of AI governance roles: More organizations are appointing dedicated teams or officers to manage AI risk, compliance, and ethics.

2. Responsible AI goes mainstream: There’s growing focus on fairness, transparency, and accountability in AI systems — not just as a best practice, but as a compliance expectation.

3. Shift toward quantitative risk models: Companies are adopting data-driven methods to measure AI risk, moving away from purely qualitative assessments.

4. Emerging global approaches: Regulations, standards, and frameworks like the EU AI Act, ISO/IEC 42001, and NIST AI RMF are shaping how organizations govern AI responsibly.

5. Crypto and digital assets enter the compliance scope: AI-driven financial tools involving crypto are prompting updates to existing compliance frameworks.
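To illustrate the quantitative approach in trend 3, the sketch below ranks AI risks by expected annual loss (estimated yearly probability multiplied by estimated financial impact). The risk names and figures are invented for illustration. Real programs would calibrate both inputs from incident data or expert estimates.

```python
def expected_annual_loss(probability_per_year, impact):
    """Expected yearly loss: likelihood of the event times its cost."""
    return probability_per_year * impact


# Hypothetical risk register: (risk, yearly probability, impact in USD)
risks = [
    ("Biased screening model", 0.10, 500_000),
    ("Unvetted third-party AI tool", 0.25, 120_000),
    ("Training data without valid consent", 0.05, 2_000_000),
]

# Rank risks from highest to lowest expected loss.
ranked = sorted(
    ((name, expected_annual_loss(p, impact)) for name, p, impact in risks),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, loss in ranked:
    print(f"{name}: ${loss:,.0f} per year")
```

Even a simple calculation like this lets teams compare risks on one scale instead of debating "high" versus "medium" labels.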

FAQs

Why should AI be regulated?

AI should be regulated to prevent bias, protect privacy, and ensure transparency in how automated decisions are made. Regulations help build trust, enforce accountability, and safeguard users from potential harm caused by opaque or unsafe AI systems.

What happens if AI isn’t regulated?

If AI isn’t regulated, it can lead to biased decisions, data misuse, lack of accountability, and safety risks. This erodes public trust, increases legal exposure, and can cause real-world harm to individuals and communities.

What are the compliance concerns of AI?

AI raises several compliance concerns including lack of transparency, potential bias in algorithms, unauthorized data usage, inadequate documentation, absence of human oversight, and failure to meet evolving legal regulations and standards like the EU AI Act or GDPR. These issues can expose organizations to regulatory fines, reputational damage, and legal liability.

Why is it difficult to regulate AI?

AI is hard to regulate because it evolves rapidly, operates across borders, and often functions as a “black box” — making its decisions hard to explain. Striking the right balance between innovation, accountability, and fairness adds to the complexity.

Does the US have AI regulations?

The US does not have a single, comprehensive federal AI law yet. However, individual states like Colorado have introduced their own AI laws, and federal agencies are issuing guidelines. The regulatory landscape is evolving, with growing debate over balancing innovation with responsible AI use.

Who is responsible for AI compliance in an organization?

- Chief Information Security Officer (CISO)

- Chief Risk Officer (CRO)

- AI Governance or Ethics Lead

- Data Protection Officer (DPO)

- Compliance and Legal Teams

- Engineering and Product Teams (for implementation)

Can AI replace compliance?

No, AI can’t replace compliance. It can support and automate parts of the process — like monitoring risks, collecting evidence, and analyzing data — but human oversight, legal interpretation, and ethical judgment are still essential for a sound compliance program.

Does shadow AI affect compliance?

Yes, shadow AI, meaning the use of AI tools without IT or compliance oversight, can seriously impact compliance. It leads to unmonitored data usage, unvetted algorithms, and gaps in documentation, increasing the risk of violations and regulatory penalties.

What are some AI risk management certifications to train employees?

- MIT Professional Education – AI and Risk Management

- Stanford Online – AI Ethics and Compliance

- CertNexus – Certified Ethical Emerging Technologist (CEET)

- World Economic Forum – AI Governance Alliance resources

- IEEE CertifAIEd

- ISO 42001 (for internal training alignment)

- Coursera – AI for Everyone (by Andrew Ng)

How to use AI in risk and compliance?

- Automate evidence collection

- Detect control failures in real time

- Monitor third-party/vendor risks

- Analyze compliance trends with dashboards

- Map controls across multiple frameworks

- Reduce manual errors in audit preparation

- Flag policy gaps and outdated documents
