
Streamline compliance with generative artificial intelligence

Last updated on January 10, 2024 (6 min. read)

Generative artificial intelligence (AI) has taken the world by storm.

Tools like ChatGPT have quickly changed how companies do business. By saving time and eliminating repetitive tasks, these new systems will accelerate development and cut costs throughout the global economy.

Governance, risk, and compliance (GRC) is no different.

Capable of rapidly transforming and querying vast amounts of data, large language models (LLMs) will help security and compliance teams cut through huge amounts of paperwork. These professionals will be able to use AI tools to inspect and refine existing documentation, reduce the burden of audits, and build customer trust.

With that said, generative AI tools do introduce a host of privacy and security issues themselves. Using them productively and wisely requires:

- Having a policy governing their use.

- Understanding data retention policies as they apply to different usage methods (visual interface versus application programming interface (API), for example).

- Opting out of information sharing whenever appropriate.

Implementing proper controls ahead of time and integrating generative AI tools into existing workflows can improve productivity and security at the same time. Additionally, having a properly structured base of underlying data is crucial to taking advantage of LLMs' strengths when it comes to synthesizing and generating new content.

Below are some examples of how GRC teams can do just that.

Analyze and improve existing compliance documents

ChatGPT and similar large language models are strong where humans often fall short: identifying problems with, and suggesting improvements to, your existing governance framework.

Check for inconsistencies and gaps between policies

When different authors draft policies and update them on varying timelines, it’s common to see problems like:

- References to documents that the author expected to exist eventually but that were never completed. For example, your GRC team might have drafted an overall risk management policy referring to a vendor risk management policy, but no one ever got around to creating the latter.

- Unclear decision frameworks. Companies will often delegate decisions to bodies such as a risk management board, but forget to identify who will sit on these boards and how often they will meet.

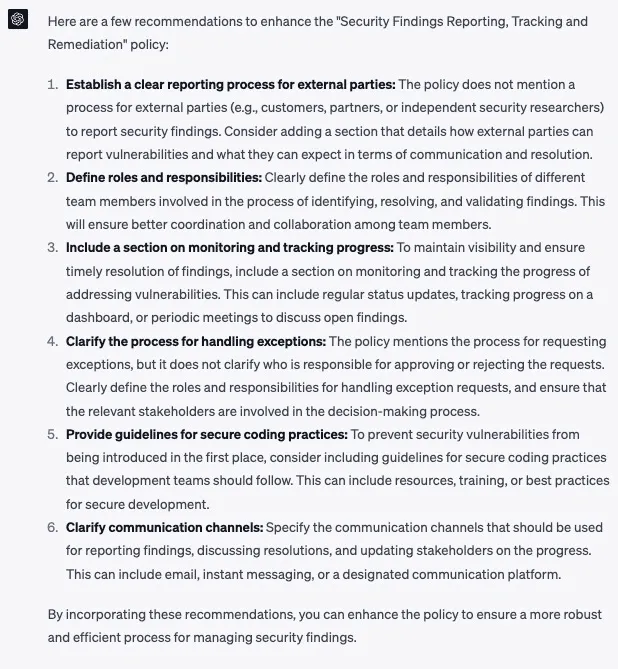
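Some of these gaps can also be caught with a simple script before an LLM is involved. The following is a minimal sketch, not a definitive implementation: the `policies` dictionary, the `find_dangling_references` helper, and the assumption that documents are cited as capitalized phrases ending in "Policy" or "Procedure" are all hypothetical and would need adapting to your own naming conventions.

```python
import re

# Hypothetical policy library: document title -> body text.
policies = {
    "Risk Management Policy": (
        "Vendors are assessed per the Vendor Risk Management Policy. "
        "See also the Incident Response Policy."
    ),
    "Incident Response Policy": "Escalate according to the Risk Management Policy.",
}

# Assumed citation convention: one or more capitalized words followed by
# "Policy" or "Procedure". Real documents may need a looser pattern.
REFERENCE = re.compile(r"\b((?:[A-Z][a-z]+ )+(?:Policy|Procedure))\b")


def find_dangling_references(docs):
    """Return {title: [cited titles that do not exist in docs]}."""
    known = set(docs)
    dangling = {}
    for title, body in docs.items():
        missing = sorted({ref for ref in REFERENCE.findall(body) if ref not in known})
        if missing:
            dangling[title] = missing
    return dangling


if __name__ == "__main__":
    for title, missing in find_dangling_references(policies).items():
        print(f"{title} cites missing documents: {', '.join(missing)}")
```

A check like this only finds references that follow the assumed naming pattern. An LLM review remains useful for looser wording and for the unclear decision frameworks described above.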

Identify missing controls or ones that can be enhanced

Generative AI can help you to review individual documents and suggest improvements as well. Evaluating an open source example of a vulnerability remediation procedure, ChatGPT provided some excellent tips the authors could use to improve their document:

By using AI to check your work before rolling out policies and procedures, you can avoid the lost time, heartache, and security gaps that result from incomplete control sets.

Automate and streamline audits

Once your GRC program is up and running, you will likely need to undergo periodic audits under compliance frameworks like SOC 2, ISO 27001, or similar standards. These audits can be disruptive and taxing if not prepared for and handled properly.

AI can help you here as well!

By training an LLM to incorporate your internal data, you can allow your auditors to ask questions quickly and without disrupting your team. Only in the rare case where certain information doesn’t exist or isn’t readily available would human-to-human interaction be necessary.

For example, an auditor using a data loader for:

- Jira or Asana could search for tickets related to specific types of events, allowing for the rapid collection of evidence.

- Google Docs could search through policies and procedures to determine whether the proper documentation is in place.

- GitHub could determine whether and when certain code changes were made.

On top of saving time for your team, providing audit staff with access to an intuitive and easy-to-use chatbot can make their experience better as well. Having a happy auditor can only help in making the process less burdensome.

While auditors are almost always under non-disclosure agreement (NDA) with the organizations they review, it still makes sense to limit the access these outside parties receive. If for no other purpose than observing the principle of least privilege, only grant access to necessary data sets and revoke it as soon as the audit is complete.
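One way to picture this kind of scoped, time-limited access is the sketch below. It is illustrative only: `AuditGrant`, `search_evidence`, and the record layout are hypothetical names rather than part of any particular product or data loader, and a real deployment would enforce these checks in the access layer of the source systems themselves.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AuditGrant:
    """Hypothetical grant: which sources an auditor may query, and until when."""
    auditor: str
    sources: frozenset
    expires_at: datetime

    def allows(self, source, now=None):
        now = now or datetime.now(timezone.utc)
        return source in self.sources and now < self.expires_at


def search_evidence(records, grant, keyword, now=None):
    """Return only records from permitted sources that mention the keyword."""
    needle = keyword.lower()
    return [
        record for record in records
        if grant.allows(record["source"], now) and needle in record["text"].lower()
    ]


if __name__ == "__main__":
    start = datetime(2024, 1, 10, tzinfo=timezone.utc)
    grant = AuditGrant("external-auditor", frozenset({"jira"}), start + timedelta(days=14))
    records = [
        {"source": "jira", "text": "Incident ticket: phishing report triaged"},
        {"source": "github", "text": "Incident hotfix merged"},
    ]
    # During the audit window, only the Jira record is visible.
    print(search_evidence(records, grant, "incident", now=start))
    # After the grant expires, nothing is returned.
    print(search_evidence(records, grant, "incident", now=start + timedelta(days=30)))
```

Giving the grant an expiry date means access lapses on its own if nobody remembers to revoke it once the audit is complete.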

Build customer trust

The third group to benefit from AI tools in a GRC program is the people who actually use your products. Every organization is different, and each buyer will likely have a slightly different way of evaluating your organization’s security.

With a well-structured Trust Vault and the power of LLMs, however, you can increase confidence in your security program while freeing your team for higher-value tasks and accelerating your sales efforts.

Because not all prospects or customers are under NDA, though, you’ll need to be especially careful about which data you give them access to. Using public-facing material only is probably a good starting point for early experiments.

Auto-generate white papers specific to certain prospects

While an “off-the-shelf” security white paper is never a bad thing for an organization to have, AI lets you take it to the next level. Using Google’s Bard, for example, you can quickly rewrite one of the company’s own security white papers to address the implications of quantum computing.

AI tools let you customize these documents to customers of different sizes, verticals, and even levels of security sophistication.

Rapidly complete bespoke security questionnaires

Even when provided “self-service” capabilities through your Trust Vault, some prospects will insist that you complete their customized questionnaires, whether in spreadsheet, web portal, or other format.

What would traditionally take hours of painstaking work from highly skilled security team members is now a much simpler task. By providing a generative AI tool with access to an overview of your organization’s security posture as well as the question being asked, you can quickly get a serviceable answer. While there might be some editing required, starting with a 90% solution is far better than beginning from scratch.
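As a rough illustration of pairing the security overview with the question, the sketch below assembles a prompt that could be sent to whichever generative AI tool your policy permits. The `build_questionnaire_prompt` function, the prompt wording, and the `NEEDS HUMAN INPUT` marker are assumptions made for this example; no specific vendor API is called.

```python
def build_questionnaire_prompt(overview: str, question: str) -> str:
    """Combine a security overview and one questionnaire item into a prompt."""
    return (
        "You are drafting an answer to a customer security questionnaire.\n"
        "Use only the facts in the overview below. If the overview does not "
        "cover the question, reply exactly: NEEDS HUMAN INPUT.\n\n"
        f"Security overview:\n{overview.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Draft answer:"
    )


if __name__ == "__main__":
    # Hypothetical overview text; in practice, use approved, shareable material.
    overview = "Customer data is encrypted at rest and in transit."
    prompt = build_questionnaire_prompt(overview, "Do you encrypt data at rest?")
    print(prompt)
```

Telling the model to flag questions the overview does not cover keeps gaps visible to the reviewer, so they are not filled with a guess.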

Conclusion

No tool is a silver bullet, and generative AI is no different.

In addition to the aforementioned security considerations, it is important to remind those using these applications that they are not infallible. Having a human review all generated content is a critical step in any new system that takes advantage of artificial intelligence.

When properly used, however, these tools can radically reduce the manual effort required of heavily taxed GRC teams while at the same time building confidence among external stakeholders like customers and auditors.

If you are interested in seeing how a well-structured GRC platform can let you use generative AI more effectively, then schedule a demo of the Scrut Automation platform now!
