

The growing role of AI in GRC: What engineering leaders need to know

Last updated on September 16, 2025


Governance, Risk, and Compliance (GRC) functions were once the sole responsibility of legal, audit, or dedicated compliance teams. But that boundary is rapidly dissolving. As cloud infrastructure, vendor ecosystems, and product decisions increasingly intersect with security and compliance obligations, engineering teams find themselves drawn into the compliance lifecycle, often without the tools or resources to manage it efficiently.

Manual methods (e.g., spreadsheets, static controls, and periodic audits) were never designed for the speed and complexity of modern engineering environments. They are slow to adapt, prone to human error, and difficult to scale. And as frameworks become more demanding and stakeholders expect continuous assurance, these traditional approaches are proving insufficient.

Artificial Intelligence (AI) is beginning to change that, not just by accelerating processes, but by rethinking how compliance tasks are embedded into engineering workflows. What once required hours of manual effort can now be delegated to specialized AI agents. Let’s look at how.

Part I: The challenges with traditional GRC in modern engineering environments

For engineering leaders, the growing intersection between product velocity and compliance responsibility is hard to ignore. But the real friction often stems from how outdated and disconnected traditional GRC processes are from the way engineering teams operate today.

1.1 Compliance workflows don’t match engineering velocity

Most compliance processes were designed for quarterly audits, not weekly releases. As engineering teams push code faster, spin up new environments, and integrate dozens of SaaS tools, traditional GRC methods, including spreadsheets, static documentation, and ad-hoc Slack threads, begin to crack under the pressure.

For Heads of Engineering and CTOs, this disconnect often results in a growing backlog of compliance tasks that sit outside the actual product lifecycle. Engineering teams end up context-switching between code and control evidence, which slows development without improving risk posture.

1.2 Tools that alert, but don’t assist

Security scanners and compliance tools can surface hundreds of findings, from failed controls to misconfigured buckets, but the burden of interpretation and resolution usually falls on the engineering team. These alerts often arrive with little context, no prioritization, and no clear ownership path.

The result? Alert fatigue. Teams tune out issues that don’t seem critical or actionable, which increases the likelihood that high-risk gaps go unresolved. And when real incidents occur, leadership is left questioning whether existing tooling added value or simply generated noise.

1.3 Vendor reviews are a bottleneck to speed

Modern development teams rely on third-party tools to move quickly, but each new integration brings its own security and compliance considerations. Manual vendor assessments, review questionnaires, and security due diligence processes can delay onboarding for days or even weeks.

This creates tension between speed and assurance. Engineers want to integrate tools that unblock progress, while risk and compliance teams require thorough reviews. When these workflows aren’t streamlined, it’s the engineering roadmap that takes the hit.

1.4 Misconfiguration overload with no clear triage path

Cloud environments generate a constant stream of findings, some critical, many not. But traditional compliance tools typically surface all misconfigurations with equal weight, leaving teams with an overwhelming list and no real strategy for where to begin.

Without clear prioritization, engineering teams may spend valuable time fixing low-severity issues while more significant risks go unnoticed. Worse, many findings require investigation just to confirm whether they matter, draining engineering hours without improving security outcomes.

1.5 Audit readiness becomes a recurring scramble

As audit cycles approach, engineering teams are often pulled into retrospective evidence collection, configuration screenshots, and access review summaries. Much of this work is repetitive, manually orchestrated, and removed from day-to-day workflows.

This last-minute rush not only diverts engineering focus but also exposes the organization to gaps and inconsistencies. Without continuous monitoring, audit preparation becomes a disruptive scramble, rather than a reflection of an already secure and compliant environment.

And the cost of getting this wrong is steep: on average, firms spend almost US$15 million on the consequences of non-compliance, 2.71 times what they would spend to maintain compliance (Ascent.ai).
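Read as a ratio, the 2.71× figure implies the following cost of maintaining compliance. This is a back-of-the-envelope calculation using the rounded US$15 million quoted above, not a number taken from the cited source:

$$
C_{\text{compliance}} \approx \frac{C_{\text{non-compliance}}}{2.71} \approx \frac{\text{US}\$15\ \text{million}}{2.71} \approx \text{US}\$5.5\ \text{million}
$$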

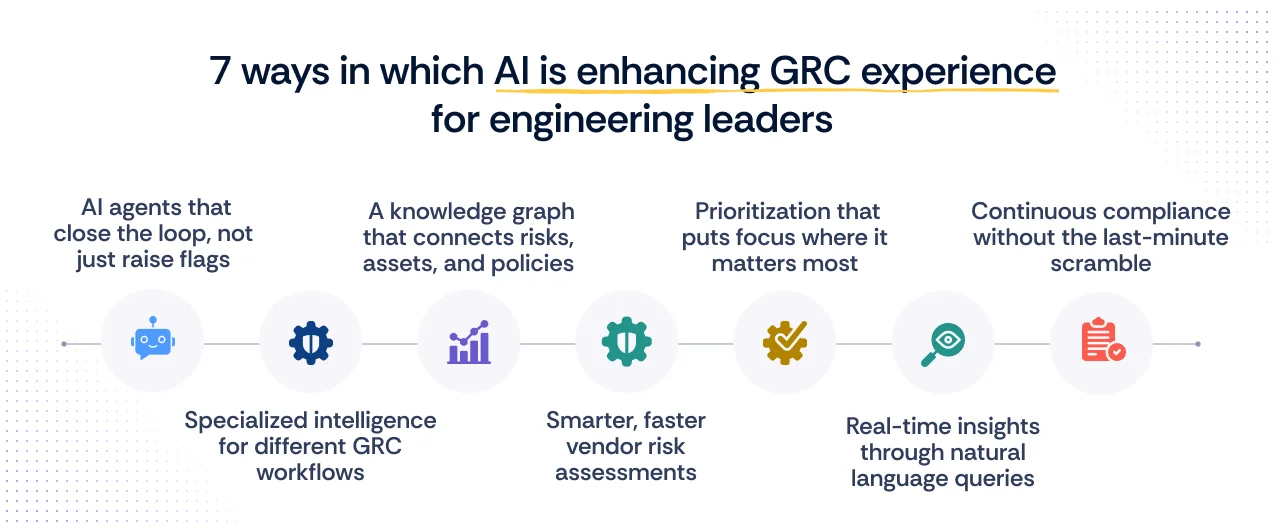

Part II: How AI is reshaping GRC for engineering leaders

Technology leaders know that simply pointing out risks is not enough; they need systems that can act with precision, adapt to the environment, and reduce the operational drag on their teams. This is where AI is making a tangible difference. By moving beyond alerts to intelligent action, AI is redefining how governance, risk, and compliance integrate with engineering workflows.

And while adoption is surging, it’s far from strategic. A recent survey found that only 22% of organizations have a defined AI strategy. Those that do are twice as likely to experience revenue growth from AI, and 3.5× more likely to realize critical AI benefits than those experimenting without a plan (Corporate Compliance Insights, July 2025). For engineering leaders, this highlights that value comes not from dabbling with AI, but from embedding it thoughtfully into GRC workflows.

2.1 AI agents that close the loop, not just raise flags

Traditional tools stop at reporting; AI takes the next step. Instead of leaving engineers with a growing list of alerts, AI agents can generate tickets, assign ownership, and track remediation through to completion. This shift turns compliance from an interrupt-driven burden into a structured, managed workflow that requires far less manual follow-up from engineering teams.
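To make “closing the loop” concrete, here is a minimal Python sketch of that lifecycle: a finding becomes a ticket, ownership is resolved from an asset-owner map, and the ticket is only resolved once a re-check confirms the fix. Everything in it (the `Finding` and `Ticket` classes, the `OWNERS` map, the status names) is hypothetical; a real system would call your ticketing tool’s API rather than keep tickets in memory.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    OPEN = "open"
    IN_PROGRESS = "in_progress"
    RESOLVED = "resolved"


@dataclass
class Finding:
    finding_id: str
    asset: str
    summary: str


@dataclass
class Ticket:
    finding: Finding
    owner: str
    status: Status = Status.OPEN
    history: list = field(default_factory=list)

    def transition(self, new_status: Status) -> None:
        # Record every state change so remediation stays auditable end to end.
        self.history.append((self.status, new_status))
        self.status = new_status


# Hypothetical asset-to-owner mapping; in practice this comes from an asset inventory.
OWNERS = {"s3://billing-exports": "team-payments", "prod-db-01": "team-platform"}


def open_ticket(finding: Finding, default_owner: str = "security-triage") -> Ticket:
    # Assign ownership from the inventory, falling back to a triage queue.
    return Ticket(finding=finding, owner=OWNERS.get(finding.asset, default_owner))


def close_if_fixed(ticket: Ticket, still_failing: bool) -> None:
    # Resolve only when a re-check shows the underlying finding is gone.
    if not still_failing:
        ticket.transition(Status.RESOLVED)


finding = Finding("F-101", "s3://billing-exports", "Bucket allows public read")
ticket = open_ticket(finding)
ticket.transition(Status.IN_PROGRESS)
close_if_fixed(ticket, still_failing=False)
print(ticket.owner, ticket.status.value)  # team-payments resolved
```

The fallback owner matters as much as the happy path: a finding on an unmapped asset still lands in someone’s queue instead of sitting unowned.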

2.2 Specialized intelligence for different GRC workflows

Generic AI models struggle with compliance because they lack domain-specific knowledge. Today’s AI agents, like those at Scrut, are trained to handle targeted tasks such as vendor risk evaluations, cloud misconfiguration analysis, policy interpretation, and access reviews. This specialization ensures outputs are relevant, accurate, and immediately useful to engineering leaders who need precise answers, not vague suggestions.

2.3 A knowledge graph that connects risks, assets, and policies

AI is most effective when it can understand context. By linking assets, controls, policies, vendors, and risks into a knowledge graph, AI can reason more like an auditor or security analyst. Instead of treating findings in isolation, it evaluates their relationships and impact on the broader environment, enabling more confident and accurate decisions.
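As a rough illustration of why the graph helps, the sketch below stores a few hypothetical relationships in memory and walks outward from one asset to find the controls, policies, vendors, and risks it touches. The node names, the `kind:name` convention, and the two-hop limit are all assumptions made for the example; a production knowledge graph would live in a dedicated store with richer, typed edges.

```python
from collections import deque

# Hypothetical compliance graph: each edge links two typed nodes ("kind:name").
EDGES = [
    ("asset:prod-db-01", "control:encryption-at-rest"),
    ("asset:prod-db-01", "vendor:backup-co"),
    ("control:encryption-at-rest", "policy:data-protection"),
    ("control:encryption-at-rest", "risk:customer-data-exposure"),
    ("vendor:backup-co", "risk:third-party-breach"),
    ("asset:marketing-site", "control:waf-enabled"),
]


def build_graph(edges):
    # Store edges in both directions so traversal can follow any relationship.
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def related(graph, start, max_hops=2):
    # Breadth-first search: map every node within max_hops to its hop distance.
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    del seen[start]
    return seen


graph = build_graph(EDGES)
impact = related(graph, "asset:prod-db-01")
print(sorted(n for n in impact if n.startswith("risk:")))
# ['risk:customer-data-exposure', 'risk:third-party-breach']
```

A finding on `prod-db-01` is thereby tied to two business risks and a policy, while the unrelated marketing site stays out of the picture.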

2.4 Smarter, faster vendor risk assessments

Third-party adoption no longer needs to be slowed by lengthy manual reviews. AI can evaluate inherent vendor risk, generate tailored questionnaires, and flag problematic responses for human review. This accelerates onboarding without compromising diligence, balancing engineering speed with compliance assurance.
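The core of that flow can be sketched in a few lines: score a vendor’s inherent risk from what it touches, scale the questionnaire to the resulting tier, and route concerning answers to a human. The weights, tier thresholds, section names, and red-flag answers below are invented for illustration. In the workflow described above, an AI model would interpret free-text responses; this sketch uses exact matching only to show where human review gets triggered.

```python
from dataclasses import dataclass

# Hypothetical weights for inherent-risk factors; tune these to your own risk appetite.
WEIGHTS = {"handles_pii": 3, "prod_access": 3, "business_critical": 2, "subprocessors": 1}


@dataclass
class Vendor:
    name: str
    handles_pii: bool
    prod_access: bool
    business_critical: bool
    subprocessors: bool


def inherent_risk(v: Vendor) -> int:
    # Sum the weights of every risk factor that applies to this vendor.
    return sum(w for factor, w in WEIGHTS.items() if getattr(v, factor))


def tier(score: int) -> str:
    return "high" if score >= 6 else "medium" if score >= 3 else "low"


# Questionnaire sections grow with the tier, so low-risk tools get a lighter review.
SECTIONS = {
    "low": ["general"],
    "medium": ["general", "access_control"],
    "high": ["general", "access_control", "encryption", "incident_response"],
}

# Hypothetical answers that should always go to a human reviewer.
RED_FLAGS = {("encryption", "no"), ("incident_response", "no"), ("access_control", "shared accounts")}


def flag_answers(answers: dict) -> list:
    # Return the sections whose normalized answer matches a known red flag.
    return [section for section, answer in answers.items()
            if (section, answer.strip().lower()) in RED_FLAGS]


crm = Vendor("acme-crm", handles_pii=True, prod_access=True,
             business_critical=False, subprocessors=True)
score = inherent_risk(crm)
print(score, tier(score), SECTIONS[tier(score)])
# 7 high ['general', 'access_control', 'encryption', 'incident_response']
print(flag_answers({"encryption": "No", "incident_response": "Yes"}))
# ['encryption']
```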

2.5 Prioritization that puts focus where it matters most

AI doesn’t just report every misconfiguration; it evaluates each one’s severity, control alignment, and relevance to the business. By highlighting the issues with the greatest impact and suggesting remediation steps (sometimes as ready-to-use code snippets), it lets engineering teams spend their time fixing what truly matters instead of wading through noise.
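A simplified version of that kind of ranking is sketched below: start from scanner severity, then boost by how many framework controls a finding maps to and by business context (production, customer data). The multipliers and fields are assumptions chosen for illustration, not a published scoring model.

```python
from dataclasses import dataclass

SEVERITY = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class Misconfig:
    rule: str
    severity: str
    mapped_controls: int          # how many framework controls this finding affects
    asset_in_production: bool
    asset_has_customer_data: bool


def priority(m: Misconfig) -> float:
    # Base score from severity, boosted by control coverage (capped) and business context.
    score = SEVERITY[m.severity]
    score *= 1 + 0.25 * min(m.mapped_controls, 4)
    if m.asset_in_production:
        score *= 1.5
    if m.asset_has_customer_data:
        score *= 1.5
    return score


findings = [
    Misconfig("s3-public-read", "high", 3, True, True),
    Misconfig("sg-open-ssh", "critical", 1, False, False),
    Misconfig("missing-tags", "low", 0, True, False),
]

for m in sorted(findings, key=priority, reverse=True):
    print(f"{priority(m):6.2f}  {m.rule}")
```

Note the design choice: a high-severity public bucket holding customer data in production outranks a critical finding on an isolated host, which is exactly the business-relevance judgment that flat, severity-only lists miss.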

Evidence shows that 72% of the most mature organizations are already using AI proactively to track risk, and 44% of them plan further investment in AI-driven risk management in the next 12 months (Corporate Compliance Insights, July 2025). That’s where the market is heading, from surface-level alerts to strategic prioritization.

2.6 Real-time insights through natural language queries

Instead of digging through dashboards, engineering leaders can now ask questions in plain language, such as, “Which vendors have access to production?” or “Show me unresolved high-risk issues.” AI parses controls, logs, and evidence to provide clear, actionable answers in real time, bridging the gap between technical and compliance perspectives.
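One common way to build this is to translate the question into a structured query and answer it from compliance records, rather than from the model’s own memory. Assistants typically use a language model for the translation step; the keyword matcher below is a deliberately simple, hypothetical stand-in so the sketch runs without one. The records, intents, and function names are all illustrative.

```python
# Hypothetical records an assistant could query once a question has been parsed.
VENDOR_ACCESS = [
    {"vendor": "acme-crm", "environment": "production"},
    {"vendor": "log-shipper", "environment": "production"},
    {"vendor": "design-tool", "environment": "staging"},
]
ISSUES = [
    {"id": "R-7", "risk": "high", "status": "open"},
    {"id": "R-9", "risk": "high", "status": "resolved"},
    {"id": "R-12", "risk": "medium", "status": "open"},
]


def vendors_with_access(env):
    return sorted({r["vendor"] for r in VENDOR_ACCESS if r["environment"] == env})


def unresolved(risk):
    return [i["id"] for i in ISSUES if i["risk"] == risk and i["status"] != "resolved"]


# Stand-in for the language-understanding step: map phrasings to structured queries.
INTENTS = [
    (("vendor", "production"), lambda: vendors_with_access("production")),
    (("unresolved", "high-risk"), lambda: unresolved("high")),
]


def answer(question):
    q = question.lower()
    for keywords, query in INTENTS:
        if all(k in q for k in keywords):
            return query()
    return None  # unrecognized question: hand off to a human or a richer model


print(answer("Which vendors have access to production?"))  # ['acme-crm', 'log-shipper']
print(answer("Show me unresolved high-risk issues."))      # ['R-7']
```

Grounding answers in structured data this way also makes them checkable: every result traces back to specific records.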

2.7 Continuous compliance without the last-minute scramble

AI agents continuously review evidence and monitor control status in the background, helping teams stay audit-ready at all times. For engineering leaders, this replaces the disruptive “audit season” scramble with ongoing assurance: compliance that evolves as fast as the systems it governs.
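At its simplest, continuous review means re-checking every piece of evidence on a schedule and flagging anything failing or older than its allowed age, long before an auditor asks. The sketch below assumes a hypothetical `Evidence` record with a per-control maximum age; it pins `now` for reproducibility, whereas a scheduled job would use `datetime.now(timezone.utc)`.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Evidence:
    control: str
    collected_at: datetime
    max_age: timedelta  # how long this evidence stays valid for its control
    passing: bool


def review(evidence, now):
    # Flag controls whose evidence is failing or older than its allowed age.
    issues = {}
    for e in evidence:
        if not e.passing:
            issues[e.control] = "failing"
        elif now - e.collected_at > e.max_age:
            issues[e.control] = "stale"
    return issues


now = datetime(2025, 9, 16, tzinfo=timezone.utc)
evidence = [
    Evidence("access-review", now - timedelta(days=100), timedelta(days=90), True),
    Evidence("mfa-enforced", now - timedelta(hours=6), timedelta(days=1), True),
    Evidence("backup-restore-test", now - timedelta(days=10), timedelta(days=30), False),
]
print(review(evidence, now))
# {'access-review': 'stale', 'backup-restore-test': 'failing'}
```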

Considerations for engineering leaders when adopting AI

Adopting AI in GRC is not just a technology decision. It requires careful thought about how it integrates with your existing engineering workflows, data practices, and governance models. For CTOs and Heads of Engineering, the following considerations are critical:

1. Workflow integration matters

AI is only effective if it works where your team already operates. Look for solutions that integrate directly with engineering tools such as Jira, GitHub, GitLab, Slack, and cloud platforms like AWS, Azure, and Google Cloud. The closer compliance tasks are embedded into your existing workflows, the less disruption they cause, and the more natural it becomes for teams to address risks as part of their daily work.

2. Data quality defines outcomes

AI systems rely on accurate and well-structured inputs. Logs, asset inventories, policies, and risk registers need to be properly maintained for AI to generate reliable outputs. Poor data quality can lead to irrelevant findings or overlooked risks.
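A small example of why this matters: an asset tagged `prod` instead of `production` would silently drop out of any “production access” query. Basic validation before data reaches an AI system catches these problems early. The inventory schema, allowed environments, and 30-day staleness threshold below are hypothetical.

```python
from datetime import date

# Hypothetical inventory rows, as they might arrive from a CMDB export or cloud API.
INVENTORY = [
    {"id": "i-01", "owner": "team-platform", "environment": "production", "last_seen": date(2025, 9, 15)},
    {"id": "i-02", "owner": "", "environment": "production", "last_seen": date(2025, 9, 14)},
    {"id": "i-03", "owner": "team-data", "environment": "prod", "last_seen": date(2025, 6, 1)},
    {"id": "i-01", "owner": "team-platform", "environment": "production", "last_seen": date(2025, 9, 15)},
]

VALID_ENVIRONMENTS = {"production", "staging", "development"}


def quality_problems(rows, today, max_staleness_days=30):
    # Return (asset id, problem) pairs that would mislead downstream analysis.
    problems, seen = [], set()
    for row in rows:
        if row["id"] in seen:
            problems.append((row["id"], "duplicate record"))
        seen.add(row["id"])
        if not row["owner"]:
            problems.append((row["id"], "missing owner"))
        if row["environment"] not in VALID_ENVIRONMENTS:
            problems.append((row["id"], f"unknown environment {row['environment']!r}"))
        if (today - row["last_seen"]).days > max_staleness_days:
            problems.append((row["id"], "not seen recently"))
    return problems


for asset_id, problem in quality_problems(INVENTORY, today=date(2025, 9, 16)):
    print(asset_id, problem)
```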

3. Explainability builds trust

Engineering leaders cannot adopt a “black box” approach to compliance. AI outputs should come with clear reasoning, whether that is why a misconfiguration was prioritized, or how a vendor risk score was calculated. Transparency is essential, both for internal confidence and external audits.
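One straightforward way to keep a score explainable is to have every rule that contributes points also contribute a human-readable reason, so the total can always be traced back to its inputs. The rules and point values in this sketch are hypothetical.

```python
def score_with_reasons(vendor):
    # Each hypothetical rule contributes points plus a reason, so the total is traceable.
    rules = [
        (vendor.get("handles_pii"), 3, "processes personal data"),
        (vendor.get("prod_access"), 3, "has access to production"),
        (not vendor.get("soc2_report"), 2, "no current SOC 2 report on file"),
    ]
    reasons = [(points, why) for applies, points, why in rules if applies]
    return sum(points for points, _ in reasons), reasons


total, reasons = score_with_reasons({"handles_pii": True, "prod_access": False, "soc2_report": None})
print(f"score={total}")
for points, why in reasons:
    print(f"  +{points}: {why}")
```

The same breakdown that reassures an internal reviewer can be exported as-is for an auditor.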

4. Balance automation with oversight

AI is highly effective at handling repetitive, context-heavy work such as creating remediation tasks, scoring vendors, or checking evidence. But strategic governance decisions, such as accepting a residual risk or interpreting regulatory intent, must remain with humans. Establishing clear boundaries preserves accountability while still reducing the engineering lift.
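Those boundaries can be encoded explicitly. The sketch below, using hypothetical action names, lets routine actions run automatically, requires a named approver for risk decisions, and defaults any unclassified action to a human so that new capabilities never bypass oversight by accident.

```python
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTOMATE = "automate"
    HUMAN_APPROVAL = "human_approval"


# Hypothetical policy: routine actions run automatically;
# decisions that change the organization's risk position need a named approver.
AUTOMATABLE = {"create_ticket", "score_vendor", "check_evidence"}
HUMAN_ONLY = {"accept_residual_risk", "interpret_regulation", "grant_exception"}


def route(action: str) -> Route:
    if action in HUMAN_ONLY:
        return Route.HUMAN_APPROVAL
    if action in AUTOMATABLE:
        return Route.AUTOMATE
    return Route.HUMAN_APPROVAL  # fail safe: unclassified actions go to a person


def execute(action: str, approver: Optional[str] = None) -> str:
    if route(action) is Route.HUMAN_APPROVAL and approver is None:
        raise PermissionError(f"{action} requires a named human approver")
    return f"{action} executed by {approver or 'agent'}"


print(execute("create_ticket"))
print(execute("accept_residual_risk", approver="cto@example.com"))
```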

5. Treat AI as an augmentation, not a replacement

The real value of AI in GRC lies in freeing engineering capacity for innovation. By automating what slows teams down (misconfiguration triage, vendor reviews, evidence collection), AI allows leaders to focus on architectural resilience, product security, and long-term growth.

How Scrut supports AI-powered GRC

Scrut brings AI into compliance through Scrut Teammates, a system of specialized agents designed to work like members of your GRC team. Each agent is tuned for a specific area, such as cloud misconfigurations, vendor risk reviews, access workflows, or policy checks, and is coordinated by a supervisory layer to ensure consistent, reliable outcomes.
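A supervisor coordinating specialist agents is a common multi-agent pattern. The sketch below shows only its control flow, with stub agents standing in for real models and tools; it is a generic illustration and makes no claim about how Scrut Teammates is implemented. Task types and agent names are hypothetical.

```python
from typing import Callable, Dict


# Hypothetical specialist handlers; each would wrap its own model, prompts, and tools.
def cloud_agent(task: dict) -> dict:
    return {"agent": "cloud", "result": f"triaged {task['resource']}"}


def vendor_agent(task: dict) -> dict:
    return {"agent": "vendor", "result": f"assessed {task['vendor']}"}


SPECIALISTS: Dict[str, Callable[[dict], dict]] = {
    "misconfiguration": cloud_agent,
    "vendor_review": vendor_agent,
}


def supervise(task: dict) -> dict:
    # Route each task to the matching specialist, then check its output before accepting it.
    handler = SPECIALISTS.get(task["type"])
    if handler is None:
        return {"agent": "supervisor", "result": "escalated to a human: no specialist"}
    output = handler(task)
    if not output.get("result"):
        return {"agent": "supervisor", "result": "escalated to a human: empty output"}
    return output


print(supervise({"type": "misconfiguration", "resource": "sg-1234"}))
print(supervise({"type": "policy_question"}))
```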

Rather than adding noise, Scrut Teammates delivers clarity. Risks are prioritized, remediation steps are suggested, and tasks are automatically assigned so that engineers don’t spend hours sifting through alerts. With a compliance knowledge graph at its core, Teammates understands the relationships between your policies, risks, and assets, allowing it to provide context-rich guidance tailored to your environment.

For CTOs and Heads of Engineering, this means fewer compliance distractions, less manual overhead, and the assurance that your systems remain audit-ready while your teams stay focused on innovation.

Ready to see AI in action for your compliance program?

Discover how Scrut Teammates can reduce compliance overhead, strengthen your security posture, and free your engineering team to focus on building.

FAQs

1. Will AI replace a GRC analyst?

No. AI is designed to complement, not replace, GRC analysts. It automates repetitive tasks such as evidence collection, risk prioritization, and vendor assessments, while analysts and compliance leaders remain responsible for judgment calls, governance decisions, and regulatory interpretation.

2. How can organizations use AI for GRC?

Organizations can use AI to streamline compliance workflows by automating evidence gathering, prioritizing risks, evaluating vendor security, and providing natural-language insights. AI helps reduce the burden on engineering teams while maintaining continuous audit readiness.

3. What is the future of GRC with AI?

The future of GRC is moving toward continuous, real-time assurance rather than periodic reviews. AI will play a central role in maintaining ongoing compliance, providing predictive insights, and enabling organizations to scale governance without slowing engineering velocity.

4. What role does AI play in risk management?

AI helps identify, analyze, and prioritize risks more effectively. By leveraging contextual data, AI can flag critical issues, suggest remediation steps, and track their resolution. This reduces alert fatigue and ensures engineering teams focus on risks that truly matter.

5. What are the standards/frameworks for AI risk management?

AI risk management is guided by several standards and frameworks that provide structured approaches for identifying, assessing, and mitigating risks associated with AI systems and for ensuring their responsible, trustworthy use. These include ISO/IEC 42001:2023, the EU AI Act, ISO/IEC 22989:2022, the HITRUST AI Security Certification, the NIST AI Risk Management Framework (AI RMF), and the AI Risk-Management Standards Profile for General-Purpose AI Systems (GPAIS).
