Data privacy in 2026: How security leaders are rethinking privacy at scale

Last updated on January 28, 2026

Every January, Data Privacy Week brings renewed attention to protecting personal data. The conversations are familiar. The principles are well understood.

What is different in 2026 is how experienced security and compliance leaders actually think and talk about data privacy when no regulator is in the room.

In those conversations, one theme comes up repeatedly: the gap between policy and reality has widened. Systems now move faster than the controls designed to govern them. AI-driven workflows, SaaS sprawl, and automated data pipelines have quietly reshaped where privacy risk is created and how it surfaces.

For many CISOs today, data privacy is no longer something you prove during an audit. It is something your systems either sustain continuously or expose under pressure.

This post explores how leaders are changing the way they evaluate privacy programs, where execution breaks down, and what effective data privacy looks like in practice in 2026.

How security and compliance leaders are rethinking data privacy in 2026

As data environments accelerate, security and compliance leaders are converging on a shared realization: many of the privacy assumptions that held for the last decade no longer match how systems actually operate.

Rather than debating new principles, leaders are reassessing where privacy breaks down in practice. These perspectives reflect what CISOs are seeing inside their organizations when policies meet real-world scale, automation, and AI-driven behavior.

The following shifts capture how data privacy is being reinterpreted on the ground in 2026.

1. Data privacy has become a systems problem, not just a compliance one

For much of the last decade, data privacy was approached as a governance exercise. Define acceptable use, document controls, and demonstrate compliance.

That approach assumed relatively stable data flows and enough time for human oversight.

In 2026, those assumptions no longer hold.

Data is continuously created, shared, and transformed across connected systems. Privacy failures increasingly resemble system failures, emerging from architecture and behavior rather than isolated violations.

Chuck Brooks, President of Brooks Consulting International, reflects this evolution.

The implication is clear. Privacy posture now depends on how systems are designed and operated, not just how controls are described.

2. Policy can signal confidence while systems tell a different story

Many organizations continue to demonstrate strong privacy intent through policies, training, and audit artifacts. Yet leaders often struggle to gain consistent visibility into where sensitive data resides or how it moves across tools.

This gap does not stem from negligence. It reflects how quickly data estates have expanded across cloud services, analytics platforms, and collaboration tools.

Rather than revealing non-compliance, this environment exposes a deeper issue.

Privacy risk accumulates in places policy was never designed to observe.

Ralph T O., Director at the Institute of Operational Privacy Design, captures this reality succinctly.

In practice, leaders are learning that policy alone cannot compensate for architectural opacity. When systems of record are fragmented, privacy oversight becomes reactive, regardless of intent.

3. Manual privacy processes break under modern volume

The limitations of manual privacy workflows have become increasingly visible.

Email-driven approvals, spreadsheet tracking, and ticket-based handling of data subject requests once felt workable. In 2026, they introduce delay and inconsistency precisely when response speed and accuracy matter most.

Zscaler’s 2025 Data@Risk report provides a concrete signal of this breakdown. Email accounted for nearly 104 million transactions that leaked billions of instances of sensitive data, making it one of the most persistent channels for unintended exposure.

This is not simply a tooling problem. It reflects a structural mismatch between high-volume data environments and human-mediated privacy controls.

Dan Lohrmann, CISO for Public Sector at Presidio, frames the impact in financial and operational terms:

Leaders are not abandoning process. They are recognizing that processes designed for lower volumes and slower systems no longer hold up.

As Sharon Lawrence, Founder of Cyber Socialites, notes:

4. AI is reshaping how privacy risk appears

AI adoption has accelerated this shift by changing where and how sensitive data is handled.

Personal and sensitive information is increasingly shared within AI-enabled productivity tools, often outside traditional privacy review paths. These interactions happen continuously, leaving little room for retrospective control.

Zscaler’s report highlights this emerging pattern, identifying AI applications such as ChatGPT and Microsoft Copilot as major contributors to data loss incidents, particularly involving sensitive identifiers.

This reframes privacy risk. Instead of appearing first as policy violations, risk surfaces as behavioral patterns within systems.

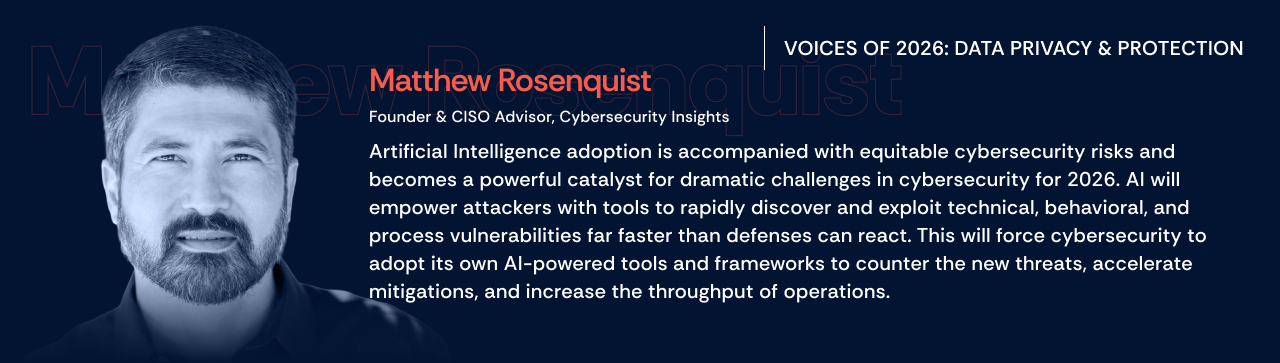

Matthew Rosenquist, Founder and CISO Advisor at Cybersecurity Insights, describes the broader consequence.

In response, leaders are paying closer attention to operational signals rather than relying solely on compliance indicators.

Summarizing the shifts: What experienced leaders are reassessing about data privacy

In 2026, experienced security and compliance leaders are rethinking how data privacy risk is identified, evaluated, and managed in practice.

Rather than treating privacy as a matter of policy coverage, leaders are paying closer attention to how systems actually behave at scale. Privacy challenges are increasingly surfaced through system behavior, process failure under load, and measurable business impact, not through gaps in policy language alone.

Leaders are also reassessing how they evaluate privacy posture. The question is no longer whether controls exist on paper, but whether those controls hold up under real-world operating conditions across complex, automated environments.

This shift does not reject regulation or compliance obligations. Instead, it reflects a growing recognition that privacy outcomes are largely determined upstream, through architectural decisions, workflow design, and operating models, long before audits or regulatory reviews take place.
